The Man Who Made Machines Talk

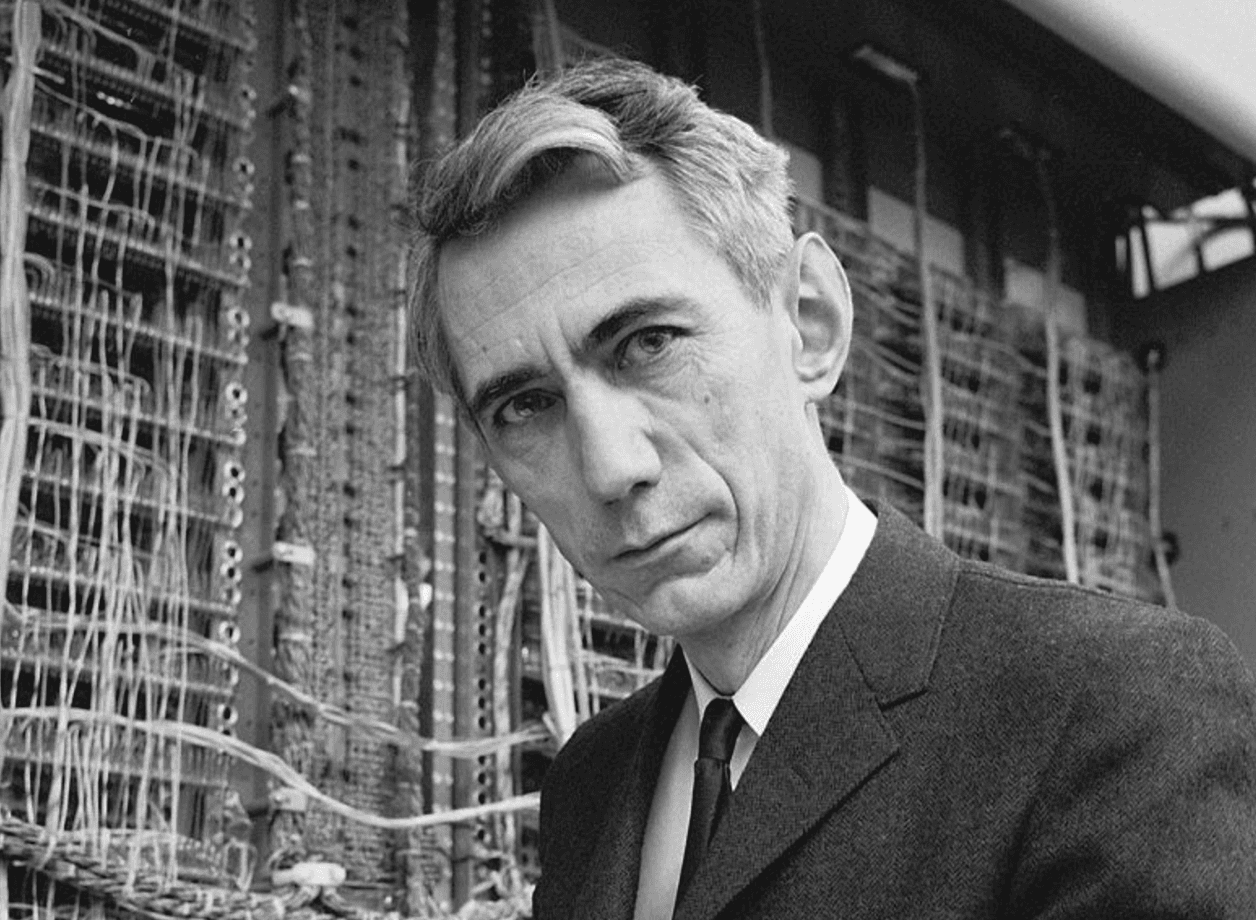

Visionary and pioneer, Claude Shannon laid the foundations of the digital era by showing that information could be measured and encoded in ones and zeros. His work revolutionised telecommunications, computing, and artificial intelligence, fundamentally transforming the way we communicate.

The man who would change the way we communicate was born on 30 April 1916 in Petoskey, Michigan, and grew up in the nearby small town of Gaylord. Claude Shannon was raised in an environment that nurtured his natural curiosity. His father, a judge by profession, was also an amateur inventor, while his grandfather had been a self-taught farmer and builder.

As a child, Shannon displayed a fascination with mechanisms and how things worked. He built model aeroplanes, assembled homemade radios, and even created a telegraph system using barbed wire to communicate with a friend who lived half a mile away.

His early education took place in Gaylord, where he excelled in mathematics and science and was also a talented athlete.

At the age of 16, he took his first significant job, as a messenger for Western Union. The work gave him his first close look at the world of telegraphic communication.

In 1932, Shannon enrolled at the University of Michigan, initially uncertain whether to study mathematics or electrical engineering. His solution was to study both simultaneously—a decision that would prove to be prophetic. Years later, his ability to integrate these disciplines would change the world.

After graduating in 1936, he moved to MIT for graduate study and funded it by working as a research assistant in the Department of Electrical Engineering, where he helped operate the Differential Analyser, an early mechanical analogue computer built by Vannevar Bush.

The Beginning of a Revealing Path

A telling anecdote from his university years occurred when he realised that the light switch system in the engineering building followed a sequence that could be expressed through Boolean algebra. This seemingly trivial discovery planted the seed for what would later become his master's thesis at MIT.

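In 1937, at just 21 years old, Shannon completed his master's thesis at MIT, "A Symbolic Analysis of Relay and Switching Circuits", unaware that he was about to revolutionise the world. He showed that Boolean algebra could describe and simplify circuits built from relays and switches, and that such circuits, using only two states, on and off, could carry out logic and arithmetic. This seemingly simple idea became the basis of digital circuit design, which today makes instant messaging, video streaming, and internet browsing possible.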

Shannon was not the archetypal genius detached from the world. Beyond being a brilliant mathematician, he built eccentric machines in his spare time—a device that solved the Rubik’s Cube, a mechanical mouse that learned to escape mazes, and even a machine that juggled. This unique blend of playful curiosity and mathematical rigour led him to make fundamental discoveries.

A little-known anecdote perfectly illustrates his way of thinking. During his time at Bell Laboratories, Shannon often played with a ping-pong ball, bouncing it against a wall. One day, a colleague asked why he was wasting time. Shannon replied that he was studying bounce patterns to better understand how information "bounces" in a communication system. His ability to uncover deep connections in everyday situations was one of his greatest strengths.

The Father of Information Theory

During the Second World War, while working on anti-aircraft defence systems, Shannon became obsessed with a seemingly abstract question: what is information? In 1948, he published his answer in what would become one of the most influential papers in the history of technology: A Mathematical Theory of Communication. In it, he explained that all information—whether a Beethoven symphony or a family photograph—could be reduced to a sequence of ones and zeros.
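To make the idea of reducing a message to ones and zeros concrete, here is a minimal Python sketch. It renders a short text as bits and computes the entropy measure Shannon introduced in the 1948 paper, H = sum of p × log2(1/p) over the symbols. The function names and sample strings are illustrative assumptions, not anything taken from Shannon's paper.

```python
import math
from collections import Counter


def to_bits(message: str) -> str:
    """Encode text as UTF-8 and write each byte as eight binary digits."""
    return "".join(f"{byte:08b}" for byte in message.encode("utf-8"))


def entropy_bits_per_symbol(message: str) -> float:
    """Empirical Shannon entropy of the symbols in `message`, in bits per symbol."""
    counts = Counter(message)
    total = len(message)
    # H = sum over symbols of p * log2(1/p), with p the observed frequency.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(to_bits("Hi"))                    # 0100100001101001
print(entropy_bits_per_symbol("aaaa"))  # 0.0 (fully predictable)
print(entropy_bits_per_symbol("abab"))  # 1.0 (one bit per symbol)
print(entropy_bits_per_symbol("abcd"))  # 2.0 (two bits per symbol)
```

The more predictable a message is, the fewer bits per symbol it needs, which is the intuition behind data compression.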

Why was this revolutionary? Before Shannon, transmitting information was like sending a message in a bottle: one could never be certain it would arrive intact. His theory made it possible to quantify precisely how much information a communication channel can carry, and it showed that, as long as the transmission rate stays below that capacity, suitable coding can make the chance of error as small as desired. It was as if he had created a blueprint for constructing reliable digital highways.
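The notion of a channel's limit can be illustrated with a textbook model that this article does not itself discuss: the binary symmetric channel, in which each transmitted bit is flipped with some probability p. Its capacity is C = 1 − H(p), where H is the binary entropy function. The sketch below assumes that model, and its function names are illustrative.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H(p) in bits; defined as 0 at p = 0 and p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_probability: float) -> float:
    """Capacity, in bits per channel use, of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_probability)


for p in (0.0, 0.01, 0.11, 0.5):
    print(f"flip probability {p:.2f} -> capacity {bsc_capacity(p):.3f} bits per use")
```

A noiseless channel (p = 0) carries one full bit per use, while a channel that flips bits half the time (p = 0.5) carries nothing, because its output is independent of its input.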

Shannon's earlier work on switching circuits had also addressed a problem central to early computer design: how to build machines capable of performing reliable logical operations. He demonstrated that simple electrical switches (on/off) suffice to perform all the operations needed for computation. The same principle underlies the digital logic in every modern computer, including those the size of a wristwatch.
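How on/off switches can compute is easy to sketch in Python. Two switches in series behave like AND, two in parallel like OR, and a normally closed contact like NOT. From these, the example builds an adder. The helper names and the four-bit width are assumptions made for illustration, not a reconstruction of Shannon's own circuits.

```python
def series(a: bool, b: bool) -> bool:
    """Two switches in series conduct only when both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel conduct when either is closed (OR)."""
    return a or b


def inverted(a: bool) -> bool:
    """A normally closed contact conducts when its control is off (NOT)."""
    return not a


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Return (sum, carry) for two one-bit inputs."""
    total = parallel(series(a, inverted(b)), series(inverted(a), b))  # XOR
    return total, series(a, b)


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Return (sum, carry_out) for two bits plus an incoming carry."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, parallel(c1, c2)


def add_bits(x: int, y: int, width: int = 4) -> int:
    """Add two non-negative integers bit by bit using only the switch logic above."""
    carry = False
    result = 0
    for i in range(width):
        bit, carry = full_adder(bool((x >> i) & 1), bool((y >> i) & 1), carry)
        result |= int(bit) << i
    return result | (int(carry) << width)


print(add_bits(5, 6))   # 11
print(add_bits(15, 1))  # 16
```

Every arithmetic step here is expressed through `series`, `parallel`, and `inverted`, which is the sense in which on/off switches are enough for computation.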

Shannon did not work in isolation. He acknowledged the influence of Norbert Wiener of MIT, the founder of cybernetics, on his statistical view of communication. At Bell Laboratories, where he spent much of his career, he also met Alan Turing, who visited in 1943. Both men were working on cryptography, but wartime secrecy kept them from discussing it, so they talked instead about the future of computing and whether machines could think. Exchanges like these helped shape the early thinking behind artificial intelligence and digital security.

Despite his genius, Shannon was known for his humility and sense of humour. At work, he would often ride a unicycle through the corridors while juggling. His colleagues described him as someone who could explain the most complex ideas with astonishing clarity.

His legacy extends far beyond mathematics or engineering. Every time we send an emoji, upload a photo to the cloud, or make a video call, we are applying the principles he developed. In a world where information is power, Shannon gave us the tools to master it.

He passed away in 2001 after a long battle with Alzheimer's. Ironically, while the disease erased his memories, it could not erase the impact of his ideas, which remain more relevant today than ever.

In the era of artificial intelligence and big data, the principles Shannon established continue to underpin the digital future.