The Eyes and Ears of Artificial Intelligence

Multimodal AI is revolutionising how machines perceive and understand the world. By integrating text, images, audio, and more, these systems promise deeper, more versatile intelligence. But what challenges must they overcome to reach their full potential?

In a Madrid laboratory, María, an oncology researcher, sits at her computer and loads a complex breast tissue image. Alongside it, she uploads an audio file containing her verbal notes on preliminary findings.

With a simple command, the system simultaneously analyses both the image and the audio, identifying suspicious patterns in the tissue that align with the descriptions in her notes. It then suggests a preliminary diagnosis with 94% confidence.

This is not a futuristic vision but a real-world application of multimodal neural networks, a technology that is transforming our interaction with artificial intelligence.

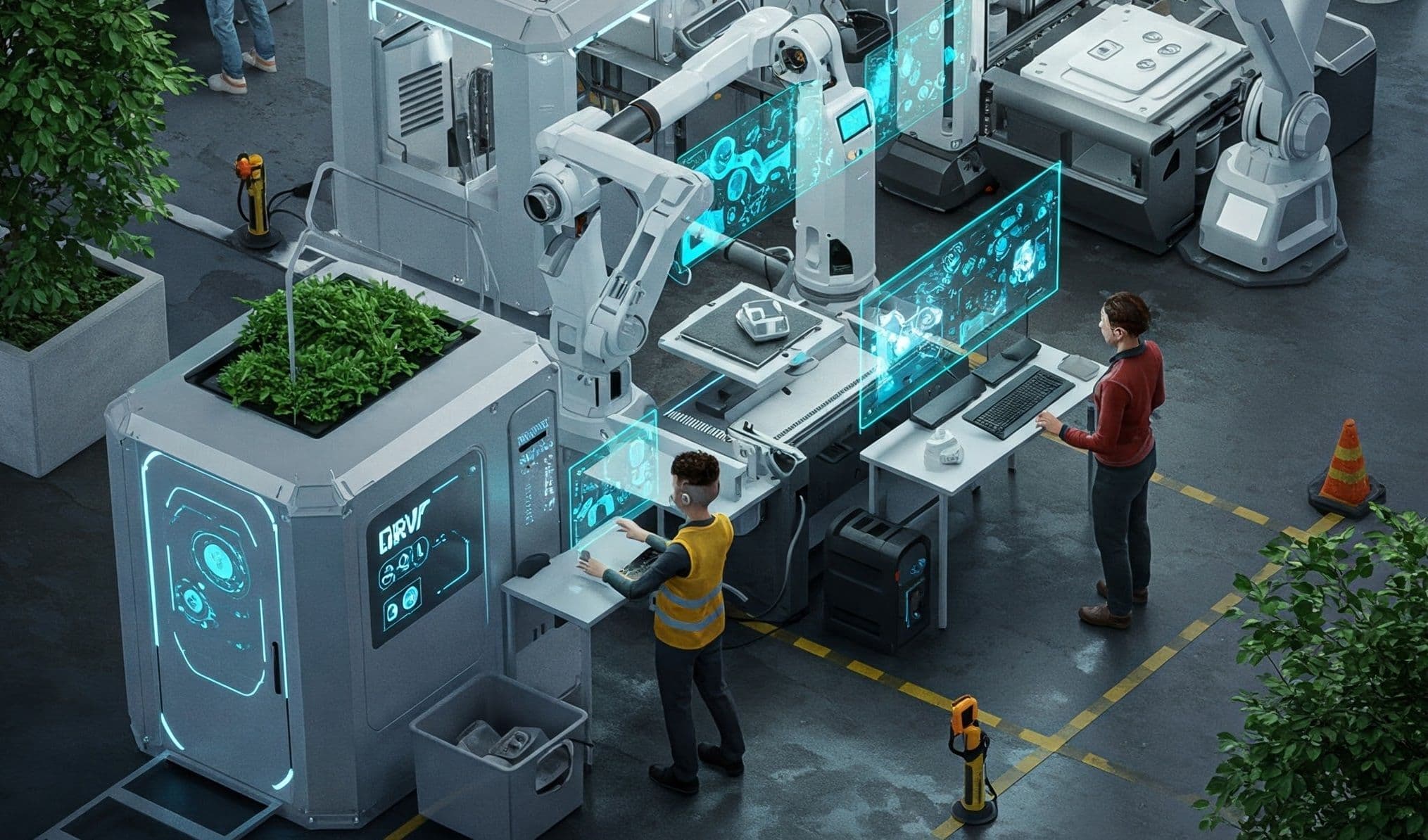

Unlike their predecessors, which processed only one type of data—be it text, images, or audio—these advanced architectures can simultaneously understand, correlate, and generate information from multiple sources, mirroring how humans perceive and interpret the world.

The Machinery Behind Artificial Sensory Integration

The operation of multimodal neural networks resembles a symphony orchestra, where each instrument (modality) contributes its unique sound, yet it is the harmonic integration that creates the true magic.

At their core, these networks employ a shared embedding architecture, where data from different modalities—high-definition images, audio processed through directional microphones, text digitised by advanced OCR, and even haptic signals—are first transformed into vector representations in latent spaces via specialised encoders.

This transformation allows seemingly incompatible data, such as pixels and sound waves, to communicate in a common mathematical language.
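The idea of a common mathematical language can be illustrated with a minimal sketch. It assumes each modality has already been reduced to a plain feature vector, and it uses random linear projections as stand-ins for trained encoders; the names `make_projection`, `encode`, and `SHARED_DIM`, as well as the feature sizes, are hypothetical and chosen only for illustration.

```python
import math
import random

SHARED_DIM = 8  # size of the common latent space (illustrative value)

def make_projection(in_dim, out_dim, seed):
    """Random matrix standing in for a trained, modality-specific encoder."""
    rng = random.Random(seed)
    scale = 1 / math.sqrt(in_dim)
    return [[rng.gauss(0, scale) for _ in range(in_dim)] for _ in range(out_dim)]

def encode(features, projection):
    """Project raw features into the shared space and L2-normalise the result."""
    vec = [sum(w * x for w, x in zip(row, features)) for row in projection]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def cosine_similarity(a, b):
    """Dot product of two unit vectors, which equals their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))

# Each modality gets its own encoder but lands in the same 8-dimensional space.
image_proj = make_projection(in_dim=16, out_dim=SHARED_DIM, seed=0)
audio_proj = make_projection(in_dim=12, out_dim=SHARED_DIM, seed=1)

rng = random.Random(2)
image_features = [rng.random() for _ in range(16)]  # placeholder pixel statistics
audio_features = [rng.random() for _ in range(12)]  # placeholder spectral features

image_embedding = encode(image_features, image_proj)
audio_embedding = encode(audio_features, audio_proj)

# With untrained projections this score carries no meaning; after training,
# matching image/audio pairs would be pushed towards higher similarity.
similarity = cosine_similarity(image_embedding, audio_embedding)
print(f"Cross-modal similarity: {similarity:.3f}")
```

Once both inputs are vectors of the same length, a single similarity measure can compare a picture with a sound, which is what makes the later fusion step possible.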

The real technological breakthrough occurs in the fusion layers, where transformers equipped with cross-attention mechanisms allow each modality to attend to the others, enabling the network to establish deep correlations between modalities.

For instance, when a multimodal system processes a video of a person speaking alongside the corresponding audio, it not only sees lip movements and hears words but also learns the subtle synchronisations between the two, vastly improving its contextual understanding.
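The cross-attention step used in such fusion layers can be sketched in a few lines. This is a simplified illustration, not a production design: it omits the learned query, key, and value projections that real transformers apply, and the tiny two-dimensional "video" and "audio" frames are invented toy values.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention where queries come from one modality
    and keys/values come from another."""
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score every key against this query, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        w = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy embeddings: two video frames (lip positions) query three audio frames.
video_frames = [[1.0, 0.0], [0.0, 1.0]]
audio_frames = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

fused, attention = cross_attention(video_frames, audio_frames, audio_frames)
for frame_index, row in enumerate(attention):
    print(frame_index, [round(w, 3) for w in row])
```

Each video frame places the most weight on the audio frame that resembles it most closely, which is the mechanism by which a trained model could pick up the synchronisation between lip movements and speech.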

This fusion demands a robust computational infrastructure capable of executing trillions of operations per second, as well as specialised frameworks designed to manage the intricate computational graphs that emerge from these interactions.

An Ecosystem of Transformative Applications

The versatility of multimodal neural networks has sparked an explosion of innovative applications across multiple sectors.

In healthcare, multimodal systems are transforming pathological diagnosis by integrating microscopic images with clinical history data to detect biomarkers that would otherwise be imperceptible to the human eye. This has led to a marked improvement in diagnostic accuracy across various diseases.

In the automotive sector, companies such as Waymo and Cruise have implemented multimodal perception systems that merge data from LIDAR, infrared cameras, ultrasonic sensors, and HD maps to generate a comprehensive understanding of the road environment. This sensory integration enables autonomous vehicles to anticipate pedestrian behaviour with greater accuracy, potentially reducing accidents compared to human drivers in controlled conditions.

Retail has also been revolutionised by these technologies. Amazon Go leverages multimodal networks that integrate computer vision, weight sensors, and Bluetooth-based location tracking to create a seamless shopping experience.

Similarly, platforms like Pinterest have deployed visual-textual search engines that allow users to find products by uploading images and verbally describing desired modifications, increasing conversion rates by 36% compared to traditional methods.

In film production, companies such as Runway ML are democratising visual content creation with tools that generate or modify scenes based on textual descriptions, hand-drawn sketches, or sound references. This is radically transforming creative workflows and reducing production costs for independent studios.

Overcoming the Challenges

Despite their immense potential, multimodal neural networks face significant challenges, which researchers and industry leaders are actively addressing.

The computational demands of these systems, which often entail high energy and financial costs, are being tackled through strategies such as developing more efficient models, optimising specialised hardware, and leveraging federated learning to distribute computational loads. Technologies like neuromorphic chips and quantum computing also promise to drastically reduce energy consumption, making these tools more widely accessible in the future.

To bridge the technological divide that favours industry giants, open-source initiatives and collaborations between universities and emerging companies are fostering the development of accessible models without compromising quality. Platforms like Hugging Face and decentralised AI projects are enabling a broader range of players to participate in innovation.

The issue of the "curse of dimensionality", in which data become increasingly sparse as the number of variables or features grows, so that models need far more examples to learn reliably, is being mitigated through advanced data representation techniques and the careful selection of diverse, balanced datasets. These solutions enhance multimodal systems' ability to process various types of information efficiently.
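One well-known symptom of the curse of dimensionality is that distances lose their discriminating power: in high-dimensional spaces, the nearest and farthest points from a query end up almost equally far away. The sketch below illustrates this with uniformly random points; the function name `relative_contrast`, the point count, and the chosen dimensions are assumptions made for the demonstration.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """Return (farthest - nearest) / nearest for random points in the unit cube.
    A small value means all points look roughly equidistant from the query."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        distances.append(math.dist(query, point))
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest

contrasts = {dim: relative_contrast(dim) for dim in (2, 10, 100, 1000)}
for dim, contrast in contrasts.items():
    print(f"{dim:>4} dimensions: relative contrast = {contrast:.2f}")
```

The contrast shrinks sharply as the number of dimensions grows, which is why compact, well-chosen representations matter so much for systems that combine several high-dimensional modalities.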

Bias mitigation tools are evolving to detect and correct potential inequities in datasets, ensuring fairer and more representative AI systems.

Interpretability, one of the most pressing challenges, is being addressed through advances in explainability techniques such as neural activation visualisations, symbolic-connectionist hybrid models, and Explainable AI (XAI) approaches. These developments allow AI models to be audited without sacrificing performance, paving the way for their adoption in regulated sectors such as healthcare and finance.

Finally, technological fragmentation is being reduced through the development of open standards and interoperable frameworks, spearheaded by organisations such as the IEEE and international AI consortia. Establishing common protocols for representation and evaluation will enable multimodal solutions to evolve cohesively, accelerating progress in the field and fostering global collaboration.

While challenges remain, the trajectory is clear: through innovation, collaboration, and strategic development, multimodal neural networks are steadily advancing towards their full potential, transforming industries and enhancing lives in multiple dimensions.

Convergence and Democratisation

The AI Business Trends 2025 report, recently published by Google Cloud, identifies multimodal artificial intelligence as the fastest-growing technological trend.

The figures are striking: the global multimodal AI market, valued at $2.4 billion in 2025, is projected to reach $98.9 billion by 2037, a roughly forty-fold increase that underscores its transformative potential.
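To put these two figures in perspective, the implied compound annual growth rate can be worked out directly. The calculation below assumes steady year-on-year growth between the two quoted values, which is a simplification; the function name is illustrative.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1

start_billion = 2.4   # market value in 2025, from the figures above
end_billion = 98.9    # projected market value in 2037
years = 2037 - 2025

cagr = compound_annual_growth_rate(start_billion, end_billion, years)
print(f"Implied compound annual growth rate: {cagr:.1%}")
```

Under that assumption, the projection corresponds to growth of roughly 36% a year sustained over twelve years.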

Google forecasts that this surge in multimodal AI will fundamentally reshape complex data analysis, offering unprecedented levels of contextualisation and personalisation.

What is most striking about the current landscape is the rapid shift from experimentation to real-world implementation. According to Travis Parker, Director of AI Strategy at Google Cloud, the business ecosystem has evolved rapidly within just a year, transitioning from single-model adoption to a multi-model strategy that integrates diverse capabilities. This shift reflects a more sophisticated approach, where organisations select different models for specific use cases, creating bespoke AI ecosystems.

The multimodal tools already available are elevating artificial intelligence to new heights, enabling systems to simultaneously process and interpret text, images, video, and audio. This multisensory capability is redefining what "understanding" means for AI.

As Parker observes, while early 2024 was marked by "intense experimentation but limited production," businesses are now beginning to integrate these capabilities, with large-scale deployment expected to materialise fully in 2025.

We are witnessing the accelerated democratisation of technologies that are reshaping our interactions with computer systems. The question is no longer whether machines can perceive the world in ways similar to us, but rather how entrusting complex perceptual tasks to increasingly sophisticated AI will transform society.

The future is unfolding before our eyes at a pace that challenges our capacity to adapt, yet simultaneously invites us to reimagine the possibilities of human-machine collaboration in a world where artificial perceptual boundaries are constantly expanding.