Can Machines Understand Our Emotions?

Emotional Artificial Intelligence is revolutionising key sectors such as healthcare, education, and automotive, but it also raises ethical dilemmas concerning privacy and bias.

As we stand on the brink of a technological revolution that challenges our deepest understanding of humanity, Emotional Artificial Intelligence (EAI) emerges as a breakthrough that redefines the boundaries of what we know.

Imagine Sara, a university student, engaging with a virtual psychological support assistant. But this is no ordinary chatbot—it detects micro-expressions, analyses vocal modulations, and processes speech content to gauge her true emotional state. It doesn’t just respond; it adapts its tone and reactions in real time, mirroring her mood.

This isn’t a scene from a science fiction novel but a glimpse into a technological reality that is fundamentally reshaping how we interact with machines. A report from Research and Markets predicts that this sector will grow by 11.73%, reaching £6.1 billion by 2030. This is not a passing trend but a revolution with far-reaching social implications.

From Binary to Empathy: The Evolution of Emotional Artificial Intelligence

EAI represents a qualitative leap from traditional artificial intelligence. While early computing systems relied on the binary logic of "0" and "1", EAI introduces emotional nuance, interpreting human emotions with unprecedented complexity.

Its technological architecture functions as an intricate network of interconnected systems. Advanced facial recognition detects micro-expressions lasting mere fractions of a second, revealing the emotional undertones of a human face. Multi-parametric voice analysis identifies tonal variations that convey emotions beyond spoken words. Meanwhile, cutting-edge natural language processing captures not only what is said but also what is implied—the unspoken nuances within a conversation.
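To make the idea of interconnected systems more concrete, here is a minimal sketch of one common way to combine such signals, often called late fusion: each channel (face, voice, text) produces its own emotion scores, and a weighted average merges them. The emotion labels, the weights, the example scores, and the function names are all illustrative assumptions, not a description of any particular product.

```python
from typing import Dict

# Hypothetical emotion labels, chosen only for illustration.
EMOTIONS = ("anger", "joy", "sadness", "neutral")


def fuse_emotions(
    modality_scores: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality emotion scores with a weighted average (late fusion)."""
    total_weight = sum(weights[m] for m in modality_scores)
    fused = {emotion: 0.0 for emotion in EMOTIONS}
    for modality, scores in modality_scores.items():
        for emotion in EMOTIONS:
            fused[emotion] += weights[modality] * scores.get(emotion, 0.0)
    # Normalise by the total weight so the result stays on the same scale.
    return {emotion: value / total_weight for emotion, value in fused.items()}


def dominant_emotion(fused: Dict[str, float]) -> str:
    """Return the label with the highest fused score."""
    return max(fused, key=fused.get)


# Made-up scores: the face appears cheerful, but voice and wording suggest sadness.
example_scores = {
    "face": {"anger": 0.1, "joy": 0.6, "sadness": 0.1, "neutral": 0.2},
    "voice": {"anger": 0.2, "joy": 0.2, "sadness": 0.5, "neutral": 0.1},
    "text": {"anger": 0.1, "joy": 0.1, "sadness": 0.7, "neutral": 0.1},
}
# Assumed weights; a real system would have to learn or calibrate these.
example_weights = {"face": 0.3, "voice": 0.3, "text": 0.4}

fused = fuse_emotions(example_scores, example_weights)
print(dominant_emotion(fused))
```

In this made-up case the smile alone would suggest joy, yet the combined estimate leans towards sadness, which illustrates why such systems draw on several channels rather than one.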

However, EAI is not without its technical challenges. Accurately interpreting human emotions remains an extraordinarily complex task. How can a machine distinguish between a genuine smile and a forced one? How can cultural biases be eliminated when analysing facial expressions or voice tones? Current algorithms still struggle to fully grasp the vast diversity of human emotional expression.

Transforming Key Sectors

In customer service, traditional chatbots are becoming obsolete. The latest generation of virtual assistants does not merely resolve queries but also perceives users’ frustration, urgency, or calmness, adapting responses accordingly. This marks a fundamental shift in human-machine interaction.

The impact on mental health is another crucial area. EAI systems can detect early patterns of depression, anxiety, or autism spectrum disorders by analysing language and emotional expression. Because they apply the same criteria to every case, they can offer a consistent initial assessment that is less exposed to the variability of individual human judgement, enabling early detection that could save lives.

Education is also evolving. Adaptive learning platforms are no longer static information repositories but dynamic environments that respond to students’ emotional states. A student struggling with a mathematical concept receives a different explanation from one who is engaged and confident, offering real-time personalised learning experiences.

The workplace is undergoing change as well. In human resources, job interviews no longer focus solely on technical skills; they also assess emotional intelligence, adaptability, and stress responses. Companies are increasingly adopting systems that analyse body language and vocal cues to evaluate the authenticity of candidates' answers.

The automotive industry is another sector embracing this revolution. Smart vehicles are integrating systems that interpret the emotional states of drivers and passengers. Advanced sensors and algorithms can detect fatigue, stress, or distraction, prompting adjustments to driving parameters or suggesting breaks to prevent accidents.

How Far Should We Go?

Despite its advancements, EAI raises fundamental ethical questions. Can a machine truly understand the complexity of human emotions without oversimplifying them? Cultural biases in emotional analysis could distort interpretations—what is perceived as anger in one culture may have an entirely different meaning in another, leading to misjudgements.

Privacy is another pressing concern. The collection of emotional biometric data raises critical questions about information security and personal rights. Who has access to this data? How is it used? Could individuals be manipulated based on their emotional responses?

Moreover, the regulation of EAI remains underdeveloped. There are currently no universal legal frameworks defining its boundaries. While some countries are beginning to introduce legislation on privacy and AI-driven emotional analysis, much remains to be done.

Between Algorithms and Emotions

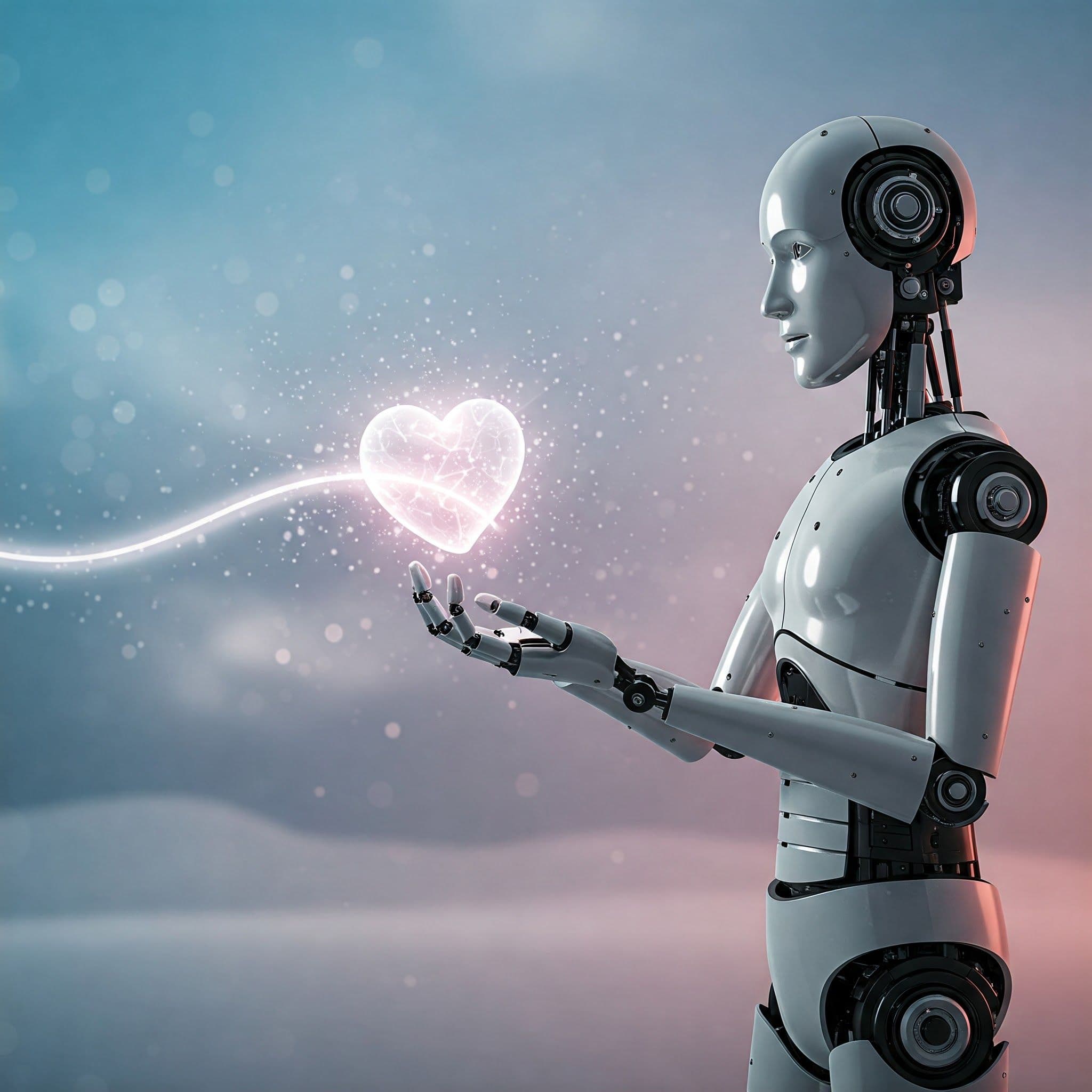

Emotional Artificial Intelligence is more than just a technological tool—it serves as a mirror, compelling us to reflect on the essence of our own humanity. It challenges us to reconsider the nature of empathy, communication, and intelligence in the digital age.

Beyond the dilemmas it presents, EAI holds the potential to enhance our ability to understand and connect with one another. Every algorithm that analyses a facial expression or a change in vocal tone represents an opportunity to improve the way humans and machines interact.

The future of Emotional Artificial Intelligence will depend on how well we balance innovation with ethical responsibility, ensuring that these technologies act as a bridge towards a more connected and empathetic society—rather than a mechanism for control or manipulation.